Tip

This is the documentation for the 22.06 version. Documentation for the latest version is published separately.

Introduction

TNSR is a packet processing platform built on open source software that delivers secure networking with high performance, manageability, and service flexibility. TNSR can scale packet processing from 1 Gbps to 10 Gbps to 100 Gbps, and even to 1 Tbps and beyond, on commercial off-the-shelf (COTS) hardware. This allows routing, firewall, VPN, and other secure networking applications to be delivered for a fraction of the cost of legacy brands. TNSR features a RESTCONF API, which allows multiple instances to be managed through orchestration, as well as a CLI for managing a single instance.

TNSR Secure Networking

TNSR is a full-featured software solution designed to provide secure networking from 1 Gbps to 400 Gbps. It suits a range of deployments, from users with moderate bandwidth needs to enterprises and service providers with demanding requirements.

Each licensed instance comes bundled with TNSR Technical Assistance Center (TAC) support from the 24/7 worldwide team of support engineers at Netgate.

Visit tnsr.com for details on TNSR availability and pricing.

TNSR Architecture

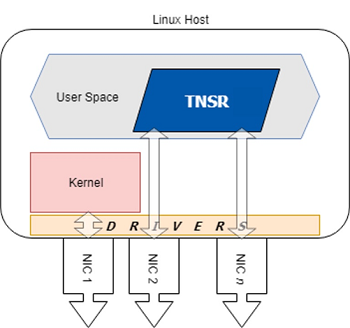

TNSR runs on a Linux host operating system. Initial configuration of TNSR includes installing associated services and configuring network interfaces. A network interface can be managed by the host OS or by TNSR, but not by both. In other words, once a network interface is assigned to TNSR, it is no longer available, or even visible, to the host OS.

As background, TNSR is the result of Netgate development, combining many open source technologies into a product that can be supported and easily implemented in production environments.

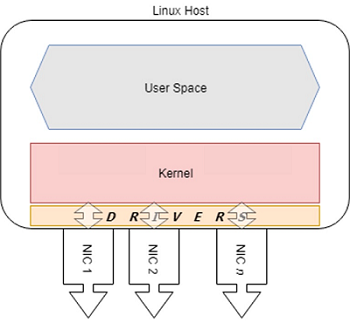

Without TNSR, Linux systems use drivers to plumb the connections from hardware interfaces (NICs) to the OS kernel. The Linux kernel then handles all I/O between these NICs. The kernel also handles all other I/O tasks, as well as memory and process management.

In high I/O situations, the kernel can be tasked with servicing millions of requests per second. TNSR uses two open source technologies to simplify this problem and service terabits of data in user space. Data Plane Development Kit (DPDK) bypasses the kernel, delivering network traffic directly to user space, and Vector Packet Processing (VPP) accelerates traffic processing.

In practical terms, this means that once a NIC is assigned to TNSR, that NIC is attached to a fast data plane, but it is no longer available to the host OS. All management of TNSR, including configuration, troubleshooting, and updates, is performed either at the console or via RESTCONF. In cloud or virtual environments, console access may be available, but the recommended configuration is still to dedicate a host OS interface for RESTCONF API access.
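As a rough illustration of what RESTCONF-based management looks like on the wire, the sketch below builds (without sending) a GET request using the generic URL layout from RFC 8040. The host name, YANG module name, and container name are hypothetical placeholders rather than TNSR's actual data model, and the `/restconf` API root is an assumption that a real deployment should confirm.

```python
from urllib.parse import quote
from urllib.request import Request

# Media type defined by RFC 8040 for JSON-encoded YANG data.
YANG_JSON = "application/yang-data+json"


def restconf_get(host: str, module: str, container: str) -> Request:
    """Build (but do not send) a RESTCONF GET request for one data resource.

    Assumes the API root is "/restconf"; RFC 8040 lets a server choose a
    different root and advertise it via "/.well-known/host-meta".
    """
    resource = f"{quote(module)}:{quote(container)}"
    url = f"https://{host}/restconf/data/{resource}"
    return Request(url, headers={"Accept": YANG_JSON}, method="GET")


# Hypothetical management host and YANG names, for illustration only.
req = restconf_get("tnsr-mgmt.example.net", "example-module", "example-config")
print(req.get_method(), req.full_url)
```

The request targets the management address reachable through the host OS interface, which is why that interface must stay outside the dataplane.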

The recommended configuration of a TNSR system includes one host NIC for the host OS and all other NICs assigned to TNSR.

This is important and bears repeating:

The host OS cannot access NICs assigned to TNSR

In order to manage TNSR, administrators must be able to connect to the console
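The ownership rule above can be modeled in a few lines. This is only a planning sketch, not a TNSR tool: the interface names and the `check_nic_plan` and `host_visible` helpers are hypothetical. A dictionary maps each NIC to a single owner, which mirrors the rule that an interface belongs to the host OS or to TNSR but never both.

```python
HOST = "host"       # NIC stays with the host OS (management, RESTCONF)
DATAPLANE = "tnsr"  # NIC is handed to the TNSR dataplane


def check_nic_plan(plan: dict[str, str]) -> list[str]:
    """Return a list of problems with a NIC ownership plan (empty if none)."""
    problems = []
    for nic, owner in plan.items():
        if owner not in (HOST, DATAPLANE):
            problems.append(f"{nic}: unknown owner {owner!r}")
    # The recommended layout keeps one NIC on the host OS for management.
    if HOST not in plan.values():
        problems.append("no NIC left for the host OS")
    return problems


def host_visible(plan: dict[str, str]) -> list[str]:
    """NICs the host OS can still see; dataplane NICs are hidden from it."""
    return sorted(nic for nic, owner in plan.items() if owner == HOST)


# Hypothetical interface names, for illustration only.
plan = {"eth0": HOST, "eth1": DATAPLANE, "eth2": DATAPLANE}
print(check_nic_plan(plan), host_visible(plan))
```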

The host OS and TNSR use separate network namespaces to isolate their networking functions. Services on TNSR can run in the host OS namespace, the dataplane namespace, or both, depending on the nature of the service.

See also

See Networking Namespaces for more details.

Additional isolation is possible inside the dataplane using Virtual Routing and Forwarding (VRF). VRF sets up isolated L3 domains with alternate routing tables for specific interfaces and dynamic routing purposes.

See also

See Virtual Routing and Forwarding for more details.
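To make the idea of isolated L3 domains concrete, here is a minimal conceptual sketch of per-VRF routing tables with longest-prefix matching. It is not TNSR or VPP code: the `Vrf` class, the `forward` helper, the interface names, and all addresses (taken from documentation ranges) are assumptions made for illustration.

```python
import ipaddress


class Vrf:
    """One isolated L3 domain with its own routing table."""

    def __init__(self, name: str):
        self.name = name
        self.routes = []  # list of (network, next_hop) pairs

    def add_route(self, prefix: str, next_hop: str) -> None:
        self.routes.append((ipaddress.ip_network(prefix), next_hop))

    def lookup(self, address: str):
        """Return the next hop of the longest matching prefix, or None."""
        addr = ipaddress.ip_address(address)
        matches = [(net, hop) for net, hop in self.routes if addr in net]
        if not matches:
            return None
        return max(matches, key=lambda m: m[0].prefixlen)[1]


# Two VRFs may hold overlapping prefixes without interfering.
default = Vrf("default")
default.add_route("10.0.0.0/8", "192.0.2.1")

customer_a = Vrf("customer-a")
customer_a.add_route("10.0.0.0/8", "198.51.100.1")
customer_a.add_route("10.1.0.0/16", "198.51.100.2")

# Each interface is bound to exactly one VRF (hypothetical names).
interface_vrf = {"lan0": default, "cust0": customer_a}


def forward(in_interface: str, destination: str):
    """Route using only the table of the VRF the packet arrived in."""
    return interface_vrf[in_interface].lookup(destination)


print(forward("lan0", "10.1.2.3"), forward("cust0", "10.1.2.3"))
```

The same destination resolves to different next hops depending on the ingress interface, and a destination missing from one VRF's table is not reachable from it even if another VRF has a route.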

Technology Stack

TNSR is designed and built from the ground up, using open source software projects including:

Vector Packet Processing (VPP)

Data Plane Development Kit (DPDK)

YANG for data modeling

Clixon for system management

FRR for routing protocols

strongSwan for IPsec key management

Kea for DHCP services

net-snmp for SNMP

ntp.org daemon for NTP

Unbound for DNS

Ubuntu as the base operating system

See also

What is Vector Packet Processing? Vector processing handles more than one packet at a time, as opposed to scalar processing which handles packets individually. The vector approach fixes problems that scalar processing has with cache efficiency, read latency, and issues related to stack depth/misses.

For technical details on how VPP accomplishes this feat, see the VPP Wiki.
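The difference between the two approaches can be sketched with a toy pipeline. This is a conceptual model only, not how VPP is implemented: the three node functions and the packet dictionaries are hypothetical, and the "switch" counter is a crude stand-in for how often the processor has to change which code it is running (the source of the instruction cache pressure mentioned above). Python itself gains no cache benefit here; the point is the loop ordering.

```python
def validate(pkt):
    return {**pkt, "valid": pkt["ttl"] > 1}


def decrement_ttl(pkt):
    return {**pkt, "ttl": pkt["ttl"] - 1}


def tag_output(pkt):
    return {**pkt, "out": "wan0"}


NODES = [validate, decrement_ttl, tag_output]


def run_scalar(nodes, packets):
    """Scalar: carry one packet through every node before starting the next."""
    switches, last, out = 0, None, []
    for pkt in packets:
        for node in nodes:
            if node is not last:  # a different node's code must be loaded
                switches += 1
                last = node
            pkt = node(pkt)
        out.append(pkt)
    return out, switches


def run_vector(nodes, packets):
    """Vector: each node processes the whole batch before the next node runs."""
    switches, batch = 0, list(packets)
    for node in nodes:
        switches += 1  # one switch per node, regardless of batch size
        batch = [node(pkt) for pkt in batch]
    return batch, switches


packets = [{"ttl": 64} for _ in range(256)]
scalar_out, scalar_switches = run_scalar(NODES, packets)
vector_out, vector_switches = run_vector(NODES, packets)
print(scalar_out == vector_out, scalar_switches, vector_switches)
```

Both orderings produce identical packets, but the scalar loop changes nodes once per packet per node, while the vector loop changes nodes only once per node for the entire batch.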

Basic Assumptions

This documentation assumes the reader has moderate to advanced networking knowledge and some familiarity with the Ubuntu Linux distribution.